https://www.oreilly.com/ideas/introducing-capsule-networks?cmp=tw-data-na-article-ainy18_thea

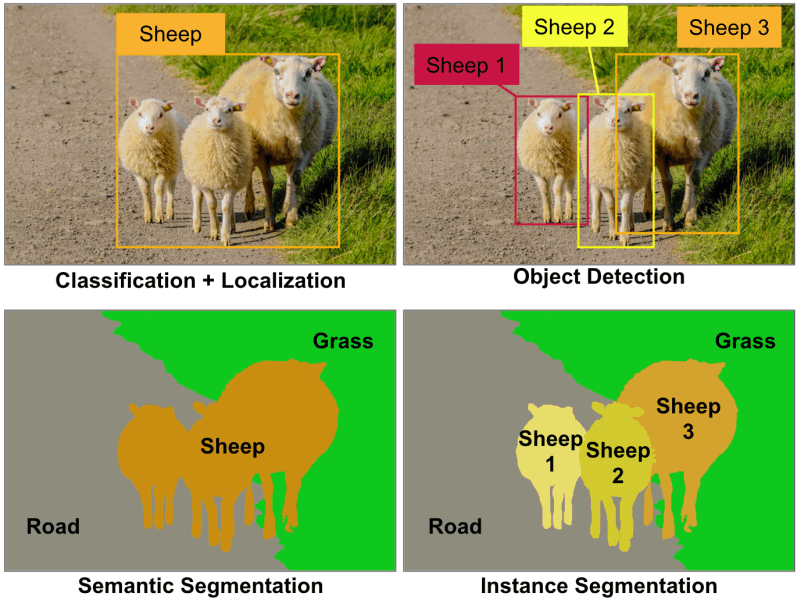

From classification to instance segmentation

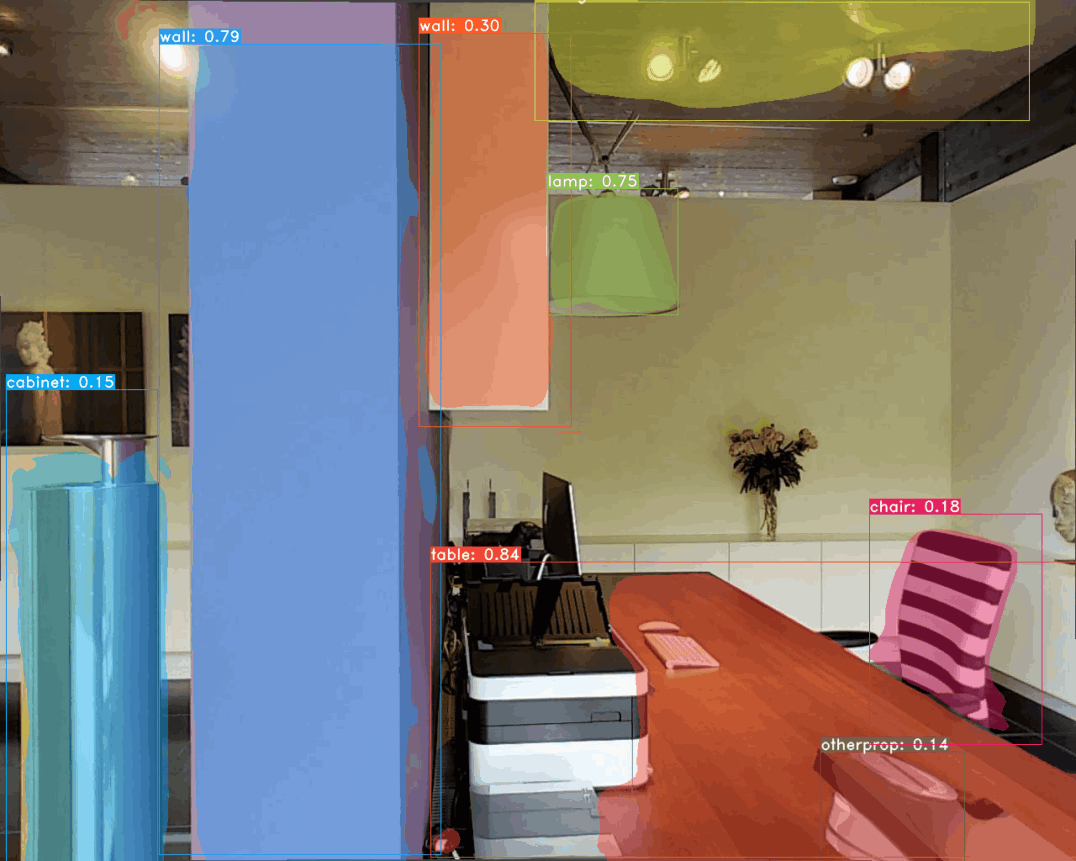

A basic approach to understanding a scene visually is to classify it based on the objects present. Classification alone just assigns a global label to the image; in addition, the image regions that contributed to the classification can be localized. Object detection finds instances of specific object classes and localizes them, typically with a bounding box. Semantic segmentation produces a dense labelling of the image, assigning a class label to every pixel; however, neighboring or overlapping objects that belong to the same class are merged into a single region. Instance segmentation also provides pixel-level labels, but it separates the objects, giving each object instance its own label in addition to its class.
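To make the difference between these outputs concrete, here is a minimal sketch that derives each task's output from one toy, hand-labelled scene. It is not a model and does not predict anything. The grid `INSTANCE_MAP`, the dictionary `INSTANCE_CLASS`, and every function name are hypothetical, chosen only for illustration. The scene contains two touching cats and one dog. Semantic segmentation merges the two cats into one "cat" region, while instance segmentation keeps them apart.

```python
# Hypothetical toy scene (ground truth, not a prediction):
# 0 = background; ids 1 and 2 are two touching cats; id 3 is a dog.
INSTANCE_MAP = [
    [0, 1, 1, 2, 2, 0],
    [0, 1, 1, 2, 2, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 3, 3, 0, 0, 0],
    [0, 3, 3, 0, 0, 0],
]
INSTANCE_CLASS = {1: "cat", 2: "cat", 3: "dog"}


def classification(instance_map, instance_class):
    """Image-level labels: which classes are present, with no location."""
    ids = {i for row in instance_map for i in row if i != 0}
    return sorted({instance_class[i] for i in ids})


def detection(instance_map, instance_class):
    """One (class, box) per instance; box = (row_min, col_min, row_max, col_max), inclusive."""
    boxes = {}
    for r, row in enumerate(instance_map):
        for c, i in enumerate(row):
            if i == 0:
                continue
            r0, c0, r1, c1 = boxes.get(i, (r, c, r, c))
            boxes[i] = (min(r0, r), min(c0, c), max(r1, r), max(c1, c))
    return [(instance_class[i], boxes[i]) for i in sorted(boxes)]


def semantic_segmentation(instance_map, instance_class):
    """A class label for every pixel; which instance a pixel belongs to is discarded."""
    return [[instance_class.get(i, "background") for i in row] for row in instance_map]


def instance_segmentation(instance_map, instance_class):
    """A separate pixel mask per instance: {instance_id: (class, set of (row, col))}."""
    masks = {}
    for r, row in enumerate(instance_map):
        for c, i in enumerate(row):
            if i != 0:
                masks.setdefault(i, (instance_class[i], set()))[1].add((r, c))
    return masks


def count_regions(label_map, label):
    """Count 4-connected regions carrying `label` in a per-pixel label map."""
    rows, cols = len(label_map), len(label_map[0])
    seen, regions = set(), 0
    for r in range(rows):
        for c in range(cols):
            if label_map[r][c] != label or (r, c) in seen:
                continue
            regions += 1  # found an unvisited pixel: start a new region
            seen.add((r, c))
            stack = [(r, c)]
            while stack:  # flood-fill the rest of this region
                y, x = stack.pop()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and (ny, nx) not in seen and label_map[ny][nx] == label):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
    return regions


if __name__ == "__main__":
    semantic = semantic_segmentation(INSTANCE_MAP, INSTANCE_CLASS)
    masks = instance_segmentation(INSTANCE_MAP, INSTANCE_CLASS)
    print(classification(INSTANCE_MAP, INSTANCE_CLASS))  # ['cat', 'dog']
    print(detection(INSTANCE_MAP, INSTANCE_CLASS))
    # [('cat', (0, 1, 1, 2)), ('cat', (0, 3, 1, 4)), ('dog', (3, 1, 4, 2))]
    print(count_regions(semantic, "cat"))  # 1: the touching cats merge
    print(sum(1 for cls, _ in masks.values() if cls == "cat"))  # 2: kept apart
```

Each step keeps strictly more information than the one before it. Classification keeps only the set of classes, detection adds one box per object, and semantic segmentation adds per-pixel classes but loses object identity. Instance segmentation keeps both the class and the identity of every pixel.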